Oracle, MySQL, Cassandra, Hadoop Database Training Classes in Skokie, Illinois

Learn Oracle, MySQL, Cassandra, Hadoop Database in Skokie, Illinois and surrounding areas via our hands-on, expert-led courses. All of our classes are offered on an onsite, online, or public instructor-led basis. Here is a list of our current Oracle, MySQL, Cassandra, Hadoop Database related training offerings in Skokie, Illinois:

Oracle, MySQL, Cassandra, Hadoop Database Training Catalog

Subcategories

Cassandra Classes

Hadoop Classes

Linux Unix Classes

Microsoft Development Classes

MySQL Classes

Oracle Classes

SQL Server Classes

Course Directory [training on all levels]

- .NET Classes

- Agile/Scrum Classes

- AI Classes

- Ajax Classes

- Android and iPhone Programming Classes

- Blaze Advisor Classes

- C Programming Classes

- C# Programming Classes

- C++ Programming Classes

- Cisco Classes

- Cloud Classes

- CompTIA Classes

- Crystal Reports Classes

- Design Patterns Classes

- DevOps Classes

- Foundations of Web Design & Web Authoring Classes

- Git, Jira, Wicket, Gradle, Tableau Classes

- IBM Classes

- Java Programming Classes

- JBoss Administration Classes

- JUnit, TDD, CPTC, Web Penetration Classes

- Linux Unix Classes

- Machine Learning Classes

- Microsoft Classes

- Microsoft Development Classes

- Microsoft SQL Server Classes

- Microsoft Team Foundation Server Classes

- Microsoft Windows Server Classes

- Oracle, MySQL, Cassandra, Hadoop Database Classes

- Perl Programming Classes

- Python Programming Classes

- Ruby Programming Classes

- Security Classes

- SharePoint Classes

- SOA Classes

- Tcl, Awk, Bash, Shell Classes

- UML Classes

- VMware Classes

- Web Development Classes

- Web Services Classes

- WebLogic Administration Classes

- XML Classes

- Object-Oriented Programming in C# Rev. 6.1

15 September, 2025 - 19 September, 2025 - Fast Track to Java 17 and OO Development

8 December, 2025 - 12 December, 2025 - Linux Fundamentals GL120

22 September, 2025 - 26 September, 2025 - OpenShift Fundamentals

6 October, 2025 - 8 October, 2025 - VMware vSphere 8.0 Skill Up

27 October, 2025 - 31 October, 2025 - See our complete public course listing

Blog Entries: publications that entertain, make you think, and offer insight

Is it possible for anyone to give Microsoft a fair trial? The first half of 2012 is in the history books, yet the firm still cannot seem to shake its public image as the Evil Empire that produces crap code.

I am in a unique position. I joined the orbit of Microsoft in 1973 after the Army decided it didn't need photographers flying around in helicopters in Vietnam anymore. I was sent to Fort Lewis and assigned to 9th Finance because I had a smattering of knowledge about computers. And the Army was going to a computerized payroll system.

Bill and Paul used Harvard's PDP-10 computer to create BASIC for the Altair. Laughable as it may be by today's standards, it is the very root of the home computer.

Microsoft became successful because it delivered what people wanted.

When you think about the black market, I’m sure most of you think of the Prohibition era. When alcohol was made illegal, it did two things: it made the bad guys more money, and it put the average Joe in danger while trying to acquire it. Fast-forward to the 21st century. Sure, there is still a black market… but come on, who is afraid of mobsters anymore? Today, we have a gaming black market. It has been around for years, but will it survive? With more and more games moving toward auction houses, could game companies “tame” the gaming black market?

In the old days of gaming on the internet, we spent most of our online time playing Hearts, Spades… whatever we could play while connected. As the years went by, better and better games came along. Then interactive multiplayer games came into the picture. These games, mainly MMORPGs, allowed characters to pick up and keep randomly generated objects known as “loot”. This evolution of gaming created the black market.

In the eyes of the software companies, the game is only leased or rented to the end user; you don’t actually have any rights to the game. This is where the market becomes black. The software companies don’t want you making money off “virtual” goods that are housed on the software or servers of the game you are playing. At this point, the software companies started to get smarter.

Where there is a demand…

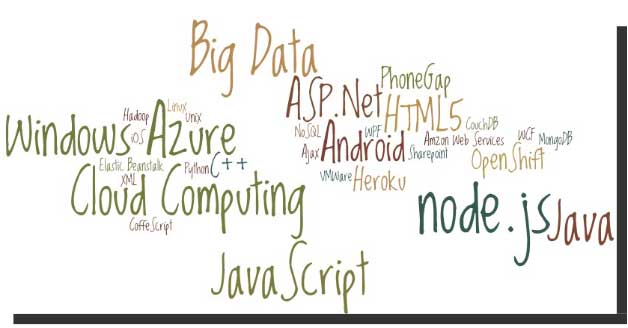

Before we get to the list, do you know what makes software skills so sought-after, and hence more valuable than most other job skills? Simply put, unlike most other skills, software skills are global and not tied to any location! With the evolution and spread of internet technologies, the physical distance between client and service provider hardly matters. So, as technology advances, it is indeed going to rain opportunities on developers with the right skills. I’ll take the liberty of reproducing the following quotes to back up my claims with statistics:

Demand for “cloud-ready” IT workers will grow by 26 percent annually through 2015, with as many as 7 million cloud-related jobs available worldwide.

---IDC White Paper (November 2012).

In the United States, the IT sector is experiencing modest growth of IT jobs in general, with the average growth in IT employment between 1.1 and 2.7 percent per year through 2020.

---U.S. Bureau of Labor Statistics.

Many of us who have iPhones download every interesting app we find on the App Store, especially when it’s free. These apps range from simple payment tools to games to measurement utilities. But, as you may have noticed, our phones become cluttered with page after page of apps that we have to swipe through to reach the one we need. However, a fairly recent Apple update lets you group your applications into easily accessible categories, good news for all of you organization lovers.

To group your apps, start with one of the applications you want to categorize, a game for example. Press your finger on that application and hold it there until all of the applications on the screen begin to jiggle. This is where the magic happens. Drag it over another game you want in the same category, and release. The two applications should now be held in a little container on your screen. If there is no other game on the same screen, and since you can’t swipe while holding the app, drop the held game onto any application you choose, move another game into the container from a different page, and then simply remove the extra application from the container.

Tech Life in Illinois


The Hartmann Software Group understands these issues and addresses them, and others, during every training engagement. Although no IT educational institution can guarantee career or application development success, HSG can get you closer to your goals far faster than self-paced learning and, arguably, faster than the competition. Here are the reasons we are so successful at teaching:

- Learn from the experts.

- We have provided software development and other IT related training to many major corporations in Illinois since 2002.

- Our educators have years of consulting and training experience; moreover, we require each trainer to have cross-discipline expertise, i.e., to be both a Java and a .NET expert, so that you get a broad understanding of how industry-wide experts work and think.

- Discover tips and tricks about Oracle, MySQL, Cassandra, Hadoop Database programming

- Get your questions answered by easy-to-follow, organized Oracle, MySQL, Cassandra, Hadoop Database experts

- Get up to speed with vital Oracle, MySQL, Cassandra, Hadoop Database programming tools

- Save on travel expenses by learning right from your desk or home office. Enroll in an online instructor led class. Nearly all of our classes are offered in this way.

- Prepare to hit the ground running for a new job or a new position

- See the big picture and have the instructor fill in the gaps

- We teach with sophisticated learning tools and provide excellent supporting course material

- Books and course material are provided in advance

- Get a book of your choice from the HSG Store as a gift from us when you register for a class

- Gain a lot of practical skills in a short amount of time

- We teach what we know… software

- We care…